If you’ve ever thought about making games but assumed Linux wasn’t the right platform for it, think again! While Windows and macOS might dominate the game development scene, Linux has quietly built up an impressive toolkit for developers. Whether you’re an indie creator looking for open-source flexibility or a studio considering Linux support, the ecosystem has come a long way. From powerful game engines to robust development tools, Linux offers everything you need to build and test games. In this article, we’ll break down why Linux is worth considering, the best tools available, and how you can get started.

Why Choose Linux for Game Development?

If you’re wondering why anyone would develop games on Linux instead of Windows or macOS, the answer is simple: freedom, flexibility, and performance.

First off, Linux is open-source, which means you aren’t locked into a specific ecosystem. You can customize your entire development environment, from the desktop interface to the compiler settings. No forced updates, no bloated background processes eating up resources — just an efficient workspace built exactly how you like it.

Then there’s the stability and performance factor. Unlike Windows, which can sometimes feel sluggish with unnecessary background tasks, Linux runs lean. This is especially useful when you’re working with heavy game engines or compiling large projects. It’s a big part of why so many servers and supercomputers run Linux.

Another big plus? Cost savings. Everything you need — IDEs, compilers, game engines, and creative tools — can be found for free. Instead of shelling out for expensive software licenses, you can reinvest that money into your project.

And let’s not forget about growing industry support. Unity, Unreal Engine, and Godot all support Linux, and with platforms like Steam Deck running Linux-based SteamOS, game development for Linux is more relevant than ever. Sure, it’s not as mainstream as Windows, but if you’re looking for a powerful, flexible, and budget-friendly development setup, Linux is definitely worth considering.

Best Game Engines for Linux

If you’re developing games on Linux, you’ll be happy to know that several powerful game engines fully support it. Here are some of the best options:

1. Unity – The Industry Standard

Unity is one of the most popular game engines out there, and yes, it supports Linux. The Unity Editor for Linux started out as a preview and is now officially supported on select distributions such as Ubuntu. Many game development companies like RetroStyle Games successfully use it for 2D and 3D game development. Plus, you can build games for multiple platforms, including Windows, macOS, and mobile, all from Linux (console targets depend on each platform holder’s SDK).

2. Unreal Engine – AAA-Quality Development

If you’re aiming for high-end graphics, Unreal Engine is a great choice. It officially supports Linux, and while the Linux version of the editor might not be as polished as the Windows one, it still gets the job done. Unreal’s powerful rendering and blueprint system make it a top pick for ambitious projects.

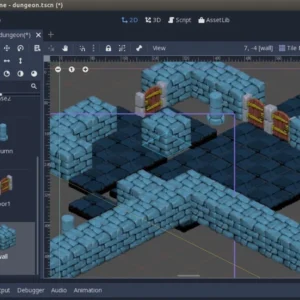

3. Godot – The Open-Source Powerhouse

If you love open-source software, Godot is a dream come true. It’s completely free, lightweight, and runs natively on Linux. The engine supports both 2D and 3D game development and has its own scripting language (GDScript) that’s easy to learn. Plus, since Godot itself is open-source, you can tweak the engine however you like.

4. Other Notable Mentions

Defold – A lightweight engine with strong 2D capabilities.

Love2D – Perfect for simple 2D games using Lua scripting.

Stride – A promising C#-based open-source engine. Note that its editor currently runs only on Windows, though games can target Linux.

Essential Tools for Linux Game Development

Once you’ve picked your game engine, you’ll need the right tools to bring your game to life. Luckily, Linux has everything you need, from coding and design to audio and version control.

1. Code Editors & IDEs

If you’re writing code, you need a solid editor. VS Code is a favorite among game developers, with great support for C#, Python, and other languages. If you prefer something more powerful, JetBrains Rider is a top-tier choice for Unity developers. For those who like minimalism, Vim or Neovim can be customized to perfection.

2. Graphics & Animation Tools

Linux has some fantastic tools for art and animation. Blender is the go-to for 3D modeling and animation, while Krita and GIMP are excellent for 2D art and textures. If you’re working with pixel art, Aseprite is a fantastic option (it’s a paid tool, though you can compile it from source yourself), and LibreSprite is its open-source fork.

3. Audio Tools

For sound effects and music, LMMS (like FL Studio but free) and Ardour (a powerful DAW) are solid choices. If you just need basic sound editing, Audacity is a lightweight but effective tool.

4. Version Control

You don’t want to lose hours of work due to a crash. That’s where Git comes in. You can use GitHub, GitLab, or Bitbucket to store your project, collaborate with teammates, and roll back to previous versions when needed.

With these tools, you’ll have everything you need to code, design, animate, and refine your game — all within Linux. And the best part? Most of them are free and open-source!

Setting Up a Linux Development Environment

Getting your Linux system ready for game development isn’t as complicated as it sounds. In fact, once you’ve set it up, you’ll have a lightweight, stable, and efficient workspace that’s perfect for coding, designing, and testing your game.

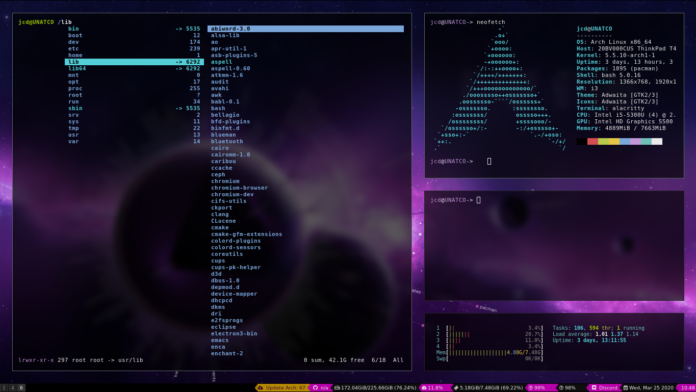

First step: Pick the Right Linux Distro: Not all Linux distributions (distros) are built the same, so choosing the right one can save you a lot of headaches. If you want ease of use, go with Ubuntu or Pop!_OS — both have great driver support and a massive community for troubleshooting. If you prefer cutting-edge software, Manjaro or Fedora are solid picks.

Second step: Install Essential Libraries & Dependencies: Depending on your game engine, you may need to install extra libraries. For example, if you’re using Unity, you’ll want Mono and the .NET SDK. Unreal Engine requires Clang and some development packages. The basic build tools can be installed via the package manager. On Debian- or Ubuntu-based distros, run:

sudo apt install build-essential git cmake

For Arch-based distros, you’d use:

sudo pacman -S base-devel git cmake
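If you switch between distros, the two commands above can be wrapped in a small helper. This is only a sketch: the function names are made up for illustration, the apt and pacman package lists come straight from the commands above, and the dnf line for Fedora is an assumption you should check against your own system.

```shell
# Sketch: choose the install command for the basic build tools.
# Function names are hypothetical; the dnf package list is an assumption.

# Print the install command for a given package manager name.
install_command_for() {
    case "$1" in
        apt)    echo "sudo apt install build-essential git cmake" ;;
        pacman) echo "sudo pacman -S base-devel git cmake" ;;
        dnf)    echo "sudo dnf install gcc gcc-c++ make git cmake" ;;  # assumption
        *)      return 1 ;;
    esac
}

# Print the first supported package manager found on this system.
detect_package_manager() {
    for pm in apt pacman dnf; do
        if command -v "$pm" >/dev/null 2>&1; then
            echo "$pm"
            return 0
        fi
    done
    return 1
}
```

Running `install_command_for "$(detect_package_manager)"` prints the command rather than executing it, so you can review it before installing anything.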

Third step: Set Up Your Game Engine: Most popular engines work on Linux, but the setup varies:

Unity: Download the Unity Hub (Linux version) and install the editor.

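Unreal Engine: Download a precompiled Linux build from Epic, or compile it from source via GitHub (this requires linking your Epic Games and GitHub accounts).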

Godot: Just download the binary, and you’re ready to go.

Fourth step: Configure Development Tools:

Install VS Code or JetBrains Rider for coding.

Set up Git for version control.
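For the Git step, one possible starting point is a repository with a .gitignore that keeps engine caches and build output out of version control. The sketch below uses hypothetical function names, and the ignored paths are assumptions (Unity's Library/ and Temp/ folders, Godot 4's .godot/ cache, a build/ directory), so adjust them to your engine.

```shell
# Sketch: start a repository with a .gitignore suited to game projects.
# Function names and the ignored paths are illustrative assumptions.

# Write a starter .gitignore into the project directory ($1, default: .).
write_game_gitignore() {
    cat > "${1:-.}/.gitignore" <<'EOF'
# Build output
build/
# Unity caches
Library/
Temp/
# Godot 4 cache
.godot/
EOF
}

# Initialize a Git repository in $1 and stage the .gitignore.
init_game_repo() {
    git -C "${1:-.}" init &&
    write_game_gitignore "${1:-.}" &&
    git -C "${1:-.}" add .gitignore
}
```

Call `init_game_repo .` from the project root, then set your identity with `git config --global user.name` and `git config --global user.email` before making the first commit.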

Building & Testing Games on Linux

Once you’ve got your game up and running in the engine, it’s time to build and test it. The good news? Linux makes this process smooth — especially if you’re targeting multiple platforms.

1. Compiling Your Game

Most game engines handle the build process automatically, but if you're using a custom engine or working with compiled languages like C++, you’ll need a good build system. CMake and Make are commonly used for managing builds, while GCC and Clang are solid compilers for performance-heavy games. To compile, you’d typically run:

cmake .

make

./yourgame
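A common variation on the commands above is an out-of-source build, which keeps generated files out of your source tree and lets Debug and Release builds sit side by side. The sketch below assumes CMake 3.13 or newer (for the `-S` and `-B` options) and a `CMakeLists.txt` in the current directory. The function names and the `build/<type>` layout are illustrative choices, not a convention any engine requires.

```shell
# Sketch: out-of-source CMake build, one directory per build type.
# Function names and the build/<type> layout are assumptions.

# Print the build directory for a build type ($1, default: Release).
build_dir_for() {
    echo "build/${1:-Release}"
}

# Configure and compile the project in the current directory.
build_game() {
    build_type="${1:-Release}"
    build_dir="$(build_dir_for "$build_type")"
    cmake -S . -B "$build_dir" -DCMAKE_BUILD_TYPE="$build_type" &&
    cmake --build "$build_dir" --parallel "$(nproc)"
}
```

With this in place, `build_game Debug` configures and compiles into `build/Debug`, using as many parallel jobs as `nproc` reports.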

If you're working with Unity or Unreal, the built-in export tools will package your game for Linux, Windows, and more.

2. Performance Optimization

Linux is great for debugging because it doesn’t have as many background processes eating up resources. To monitor performance, you can use:

htop – For checking CPU and memory usage.

glxinfo | grep "OpenGL version" – To verify your GPU drivers.

Vulkan tools – If your game uses Vulkan for rendering.
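Before relying on these tools, it helps to know which ones are actually installed. The following sketch checks for them with `command -v`. The function names are hypothetical, and `vulkaninfo` is assumed here as the representative Vulkan tool.

```shell
# Sketch: report which diagnostic tools are installed.
# Function names are hypothetical; vulkaninfo stands in for "Vulkan tools".

# Succeed if the named command is available on PATH.
have_tool() {
    command -v "$1" >/dev/null 2>&1
}

# Print "<tool>: found" or "<tool>: missing" for each argument.
report_tools() {
    for tool in "$@"; do
        if have_tool "$tool"; then
            echo "$tool: found"
        else
            echo "$tool: missing"
        fi
    done
}
```

For example, `report_tools htop glxinfo vulkaninfo` prints one status line per tool, so you can install whatever is missing through your package manager.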

3. Testing Across Different Hardware & Distros

Not all Linux systems are the same, so it’s a good idea to test your game on multiple distros. Tools like Flatpak and AppImage help create portable builds that work across different Linux versions. If you’re planning to distribute on Steam, its Proton compatibility layer lets you check how well a Windows build of your game runs on Linux.
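One frequent cause of a native build failing on another distro is a missing shared library. As a rough sketch, you can list the unresolved dependencies of a binary with `ldd`, which prints `=> not found` for any library it cannot locate. The function names below are hypothetical, and `./yourgame` is the same placeholder used earlier.

```shell
# Sketch: list shared libraries a binary needs that this system lacks.
# Function names are hypothetical.

# Read ldd output on stdin and print only the unresolved library names.
filter_missing_libs() {
    awk '/=> not found/ { print $1 }'
}

# Print the unresolved libraries for the binary at $1.
missing_libs() {
    ldd "$1" | filter_missing_libs
}
```

Running `missing_libs ./yourgame` on each test system gives a quick list of what to bundle or declare as a dependency. An empty result means every library was resolved on that machine.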

Challenges & Limitations

While Linux is a great platform for game development, it isn’t without its challenges. If you’re coming from Windows or macOS, you might run into a few roadblocks — but nothing that can’t be worked around.

Some industry-standard tools, like Adobe Photoshop, Autodesk Maya, and certain middleware, don’t have native Linux versions. Luckily, there are solid alternatives like GIMP, Krita, and Blender, but if you absolutely need a Windows-only tool, Wine or a virtual machine might be your best bet.

While Linux has come a long way with hardware support, GPU drivers can still be tricky. NVIDIA’s proprietary drivers work well but sometimes require extra setup, while AMD’s open-source drivers are generally more stable but may lag in some optimizations. If you’re using Vulkan, make sure your drivers are up to date for the best performance.

Linux gaming has grown, especially with Steam Deck and Proton, but it’s still a niche market. If you’re planning to sell a game, Windows and consoles should be your priority — Linux can be a nice bonus, but not the main target unless you’re making something for the open-source community.

Despite these challenges, many developers like RetroStyle Games successfully create games on Linux. The key is finding the right workflow and tools that work for you. And with the growing support from game engines and platforms, Linux game development is only getting better!

Conclusion


So, is Linux a good choice for game development? Absolutely — but with some caveats. If you value customization, performance, and open-source tools, Linux gives you everything you need to build amazing games. Plus, with engines like Unity, Unreal, and Godot supporting Linux, developing on this platform is more viable than ever.

That said, it isn’t all smooth sailing. You might have to tweak drivers, find alternatives to proprietary software, and troubleshoot compatibility issues. But if you’re willing to put in the effort, Linux rewards you with a fast, stable, and distraction-free development environment.

At the end of the day, whether Linux is right for you depends on your workflow and project needs. If you’re curious, why not set up a test environment and give it a shot? You might be surprised at how much you like it!

There are plenty of markup languages available. This page is written on HTML, a markup language. One of them is Markdown, a lightweight markup language that lets writers, developers, and website administrators to format text easily. In Markdown, one of the most used formatting features is italics. In Markdown italics is used to emphasize text, one of the reasons why HTML uses “em” in tag to indicate emphasis.

There are plenty of markup languages available. This page is written on HTML, a markup language. One of them is Markdown, a lightweight markup language that lets writers, developers, and website administrators to format text easily. In Markdown, one of the most used formatting features is italics. In Markdown italics is used to emphasize text, one of the reasons why HTML uses “em” in tag to indicate emphasis.

When you are setting up an SSD, one of the most important questions that you face is: which is the right partition style for me? There is a decision to make: MBR vs GPT SSD. MBR stands for Master Boot Record while GPT stands for GUID Partition table. This choice is important because the choice you make will determine the compatibility, performance, and future expansion options.

When you are setting up an SSD, one of the most important questions that you face is: which is the right partition style for me? There is a decision to make: MBR vs GPT SSD. MBR stands for Master Boot Record while GPT stands for GUID Partition table. This choice is important because the choice you make will determine the compatibility, performance, and future expansion options.

What is JSON Checker?

What is JSON Checker?

Typography isn’t just for designers—it plays a vital role in programming, terminal applications, system interfaces, and documentation readability. Whether you’re customizing your Linux desktop, developing a CLI tool, or enhancing your terminal experience, the right font can make all the difference.

Typography isn’t just for designers—it plays a vital role in programming, terminal applications, system interfaces, and documentation readability. Whether you’re customizing your Linux desktop, developing a CLI tool, or enhancing your terminal experience, the right font can make all the difference.

Conclusion

Conclusion

In today’s competitive digital landscape, small businesses need to leverage every tool and strategy available to stay relevant and grow. One such strategy is content marketing, which has proven to be an effective way to reach, engage, and convert potential customers. However, for many small business owners, managing content creation and distribution can be time-consuming and resource-intensive. This is where

In today’s competitive digital landscape, small businesses need to leverage every tool and strategy available to stay relevant and grow. One such strategy is content marketing, which has proven to be an effective way to reach, engage, and convert potential customers. However, for many small business owners, managing content creation and distribution can be time-consuming and resource-intensive. This is where

As a digital marketing agency, your focus is to provide high-quality services to your clients while ensuring that operations run smoothly. However, managing the various components of SEO, such as link-building, can be time-consuming and resource-draining. This is where white-label link-building services come into play. By outsourcing your link-building efforts, you can save time and resources, allowing your agency to focus on more strategic tasks that directly contribute to your clients’ success. Below, we’ll explore how these services can benefit your agency in terms of time and resource management.

As a digital marketing agency, your focus is to provide high-quality services to your clients while ensuring that operations run smoothly. However, managing the various components of SEO, such as link-building, can be time-consuming and resource-draining. This is where white-label link-building services come into play. By outsourcing your link-building efforts, you can save time and resources, allowing your agency to focus on more strategic tasks that directly contribute to your clients’ success. Below, we’ll explore how these services can benefit your agency in terms of time and resource management.

Kali Linux is a Debian-based, open-source operating system that’s ideal for penetration testing, reverse engineering, security auditing, and computer forensics. It’s a rolling release model, as multiple updates of the OS are available in a year, offering you access to a pool of advanced tools that keep your software secure. But how to update Kali Linux to the latest version to avoid risks and compatibility issues?

Kali Linux is a Debian-based, open-source operating system that’s ideal for penetration testing, reverse engineering, security auditing, and computer forensics. It’s a rolling release model, as multiple updates of the OS are available in a year, offering you access to a pool of advanced tools that keep your software secure. But how to update Kali Linux to the latest version to avoid risks and compatibility issues?

2. DeepCode

2. DeepCode

In today’s digital age, safeguarding your communication is paramount. Email encryption serves as a crucial tool to protect sensitive data from unauthorized access. Linux users, known for their preference for open-source solutions, must embrace encryption to ensure privacy and security.

In today’s digital age, safeguarding your communication is paramount. Email encryption serves as a crucial tool to protect sensitive data from unauthorized access. Linux users, known for their preference for open-source solutions, must embrace encryption to ensure privacy and security.

E-commerce sites are competing with countless competitors, so they must operate with speed, efficiency, and scalability to meet customer demands. Behind the scenes, many of these sites rely on Linux to keep their platforms running smoothly. From hosting to video adverts, Linux plays an important role in ensuring retail sites perform

E-commerce sites are competing with countless competitors, so they must operate with speed, efficiency, and scalability to meet customer demands. Behind the scenes, many of these sites rely on Linux to keep their platforms running smoothly. From hosting to video adverts, Linux plays an important role in ensuring retail sites perform

Understanding the Error

Understanding the Error

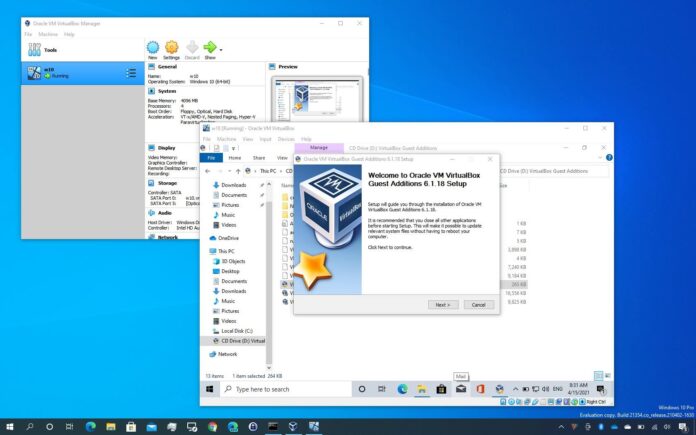

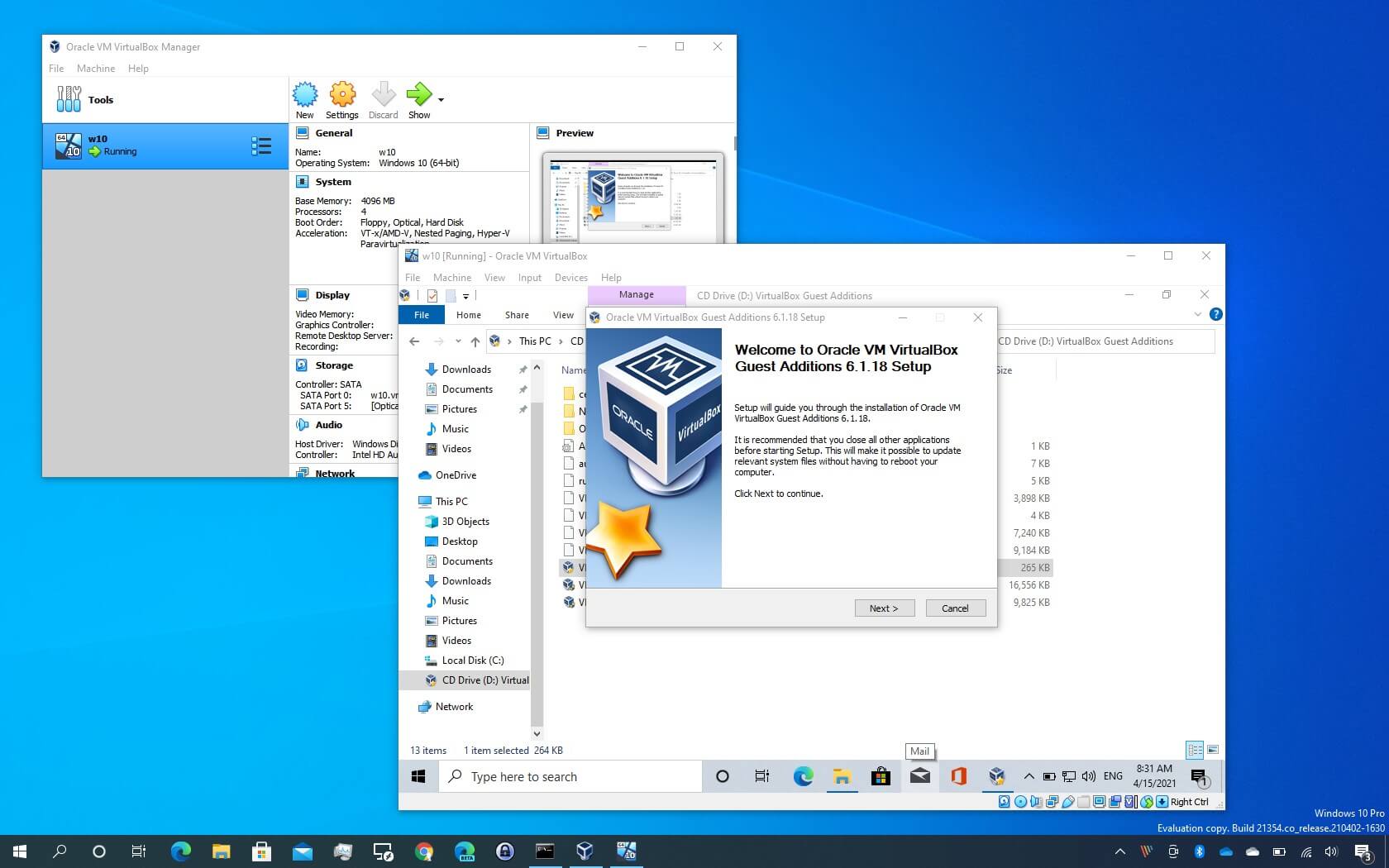

What are VirtualBox Guest Additions?

What are VirtualBox Guest Additions?

QR codes have revolutionized how we share information, offering a fast and efficient way to connect physical and digital worlds. In the Linux ecosystem, the adaptability of QR codes aligns seamlessly with the open-source philosophy, enabling developers, administrators, and users to integrate QR code functionality into various workflows. Leveraging a

QR codes have revolutionized how we share information, offering a fast and efficient way to connect physical and digital worlds. In the Linux ecosystem, the adaptability of QR codes aligns seamlessly with the open-source philosophy, enabling developers, administrators, and users to integrate QR code functionality into various workflows. Leveraging a